The Agentic Sandbox: Infrastructure for Safe AI

Frank Kilcommins

Estimated read time: 8 min

Last updated: October 13, 2025

TL;DR Jentic’s agentic sandbox lets enterprises test and validate AI agents safely by simulating real APIs and data systems. It turns experimentation into audited, reusable workflows so AI can move from testing to production with structure, trust, and control.

The Agentic Sandbox: Infrastructure for Safe, Structured AI Transformation

A simulated environment mirrors internal APIs and data systems, letting teams test agents safely, identify high-value opportunities, and promote only validated workflows to production.

That short description captures the idea behind Jentic’s agentic sandbox. But beneath its simplicity lies a new architectural layer that is essential for enterprises preparing to adopt agentic AI responsibly.

AI systems are moving from understanding language to taking action. They plan, decide, and execute tasks across APIs and data systems. For enterprises with decades of infrastructure, that power is both promising and perilous. The challenge is not building agents that can act, but enabling them to act safely inside environments where trust, governance, and predictability matter.

The agentic sandbox is where that happens. It is where enterprises learn how to use agents without risk, where reasoning is observed, workflows are discovered and refined, and where AI becomes measurable, auditable, and ready for production.

Sandboxes are Non-Negotiable for Safe Agency

Enterprise demand for agentic capabilities has arrived sooner than anyone expected, but most organizations aren’t yet equipped to deploy them safely. Those exploring AI face a common tension: innovation demands freedom, but operations demand control. Agents introduce a new level of autonomy. They reason about goals and take initiative, but they can also make unpredictable decisions that break integrations or expose sensitive data. Moving from prediction to action is harder than it sounds, and it is what’s stalling most return on investment in what McKinsey has called “agentic business transformation”.

In consumer applications, experimentation can be tolerated. In an enterprise environment, it cannot. Systems span critical processes, regulated data, and years of operational dependencies. In an agentic construct, agents that reason freely without structure can easily overstep. One poorly reasoned API call can cascade into compliance, cost, or security issues.

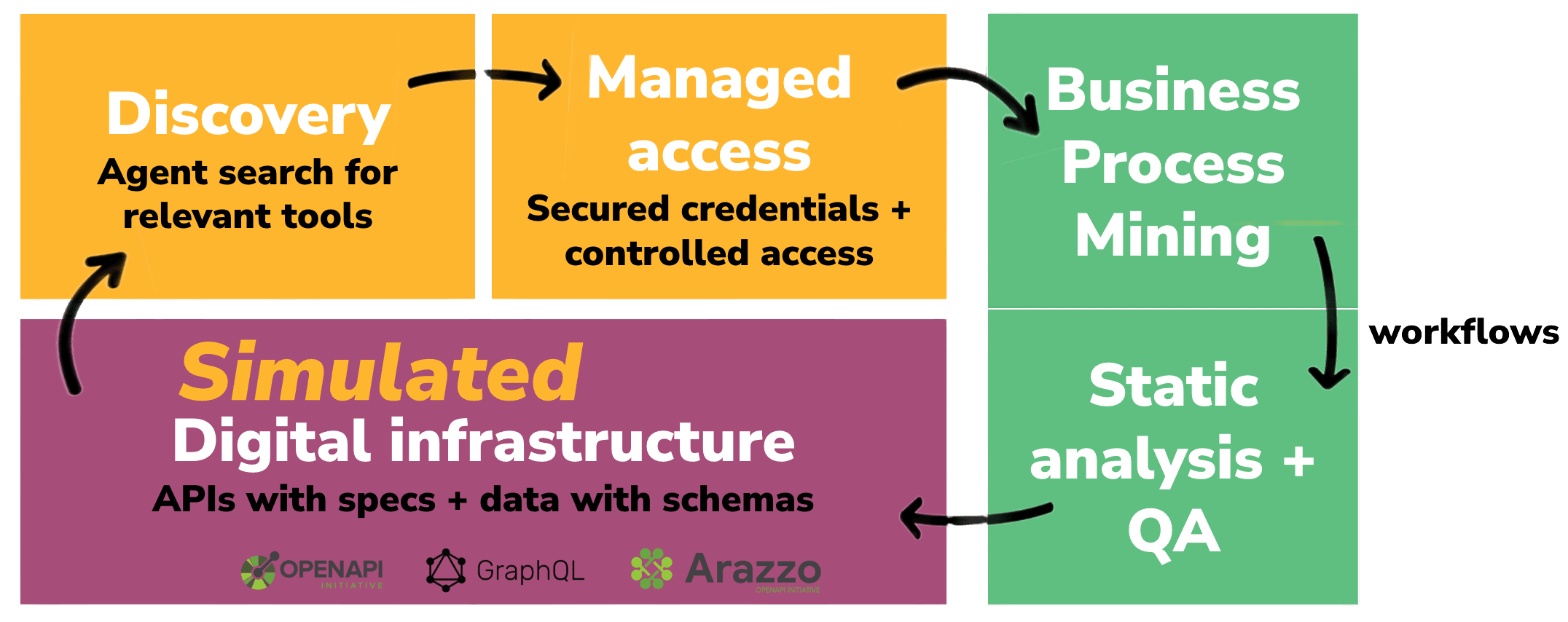

Enterprises are learning that the problem is not intelligence; it is integration and business logic. They need a way to validate and govern how LLM reasoning strategies turn into action. Agentic sandboxes become the architectural bridge that lets enterprises move from curiosity into control. They mirror the organization’s digital environment (APIs, schemas, authentication flows, and data interfaces) but replace live systems with safe, simulated equivalents. Within this environment, teams can test agents on synthetic data, observe their behavior, and identify where reasoning leads to value and where it leads to risk, before anything reaches production.

This is what I call safe agency: giving agents the ability to explore, learn, and act, but within explicit guardrails that protect the business.

Inside the Sandbox: Safe Agency by Design

In practice, the agentic sandbox is a living digital twin of an enterprise’s API and data landscape. It contains the same authentication flows, schema structures, and integration points, but nothing inside it can cause harm. No one gets fired for safe experimentation in the sandbox! It is a complete simulation layer where agents can plan, reason, and execute as if they were live in production.

Within this environment, every agent action is observed, every call is logged and traceable, and every success or failure informs the next steps. When an agent completes a task successfully, the sandbox records that behavior as a validated workflow: a clear, auditable sequence of steps that achieved the desired outcome. When an agent fails, the trace reveals exactly where reasoning or access control went wrong. The system learns from both. Only validated workflows graduate from simulation to production.
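The record-and-promote behavior described above could be sketched roughly as follows. This is a minimal illustration, not Jentic's implementation: `Call`, `SandboxTrace`, and `promote` are hypothetical names, and the rule that promotion requires an all-successful trace plus an explicit human approval is an assumption drawn from the human-in-the-loop validation this article describes.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Call:
    """One simulated API call made by an agent (hypothetical shape)."""
    operation_id: str
    status: int


@dataclass
class SandboxTrace:
    """Ordered log of every call an agent made while attempting a task."""
    task: str
    calls: List[Call] = field(default_factory=list)

    def record(self, operation_id: str, status: int) -> None:
        self.calls.append(Call(operation_id, status))

    def first_failure(self) -> Optional[Call]:
        """Return the first non-2xx call, i.e. where the attempt went wrong."""
        return next((c for c in self.calls if not 200 <= c.status < 300), None)


def promote(trace: SandboxTrace, approved_by_human: bool) -> Optional[List[str]]:
    """Return the validated step sequence, or None if the trace does not qualify."""
    if not trace.calls or trace.first_failure() is not None:
        return None  # empty or failed attempts are kept for diagnosis only
    if not approved_by_human:
        return None  # assumed rule: nothing is promoted without human sign-off
    return [c.operation_id for c in trace.calls]
```

In this sketch a failed attempt is never discarded: `first_failure()` points at the call that broke, which is the kind of information a reviewer would need before the agent tries again.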

Safe agency is not about restricting AI. It is about giving it structure and feedback. Agents gain the freedom to experiment, but they do so under supervision. Human-in-the-loop validation ensures that only proven, high-value patterns are promoted. Over time, this creates a growing library of trusted workflows that form the backbone of an enterprise’s discoverable, intent-based agentic capability.

Workflows: The True Unit of Enterprise Intelligence

Enterprises succeed not because of individual agents, but because of the workflows they capture. Every time an agent completes a task correctly, it reveals a repeatable pattern of logic: a recipe for success. As McKinsey also noted in their key elements of agentic AI deployment: “It’s not about the agent; it’s about the workflow”.

Jentic’s agentic sandboxes automatically convert these validated patterns into Arazzo workflows. Arazzo is an open, declarative standard that describes how business outcomes are achieved through APIs. Each workflow becomes a reusable, portable document that defines the sequence of API interactions, parameters, and dependencies required to perform a specific function.
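To make the shape of such a document concrete, here is a small sketch that builds one as a Python dictionary and serializes it to JSON. The top-level and step field names (`arazzo`, `sourceDescriptions`, `workflows`, `steps`, `successCriteria`, and the `$inputs` / `$steps` runtime expressions) follow the public Arazzo specification as I understand it; the orders API, its `getOrder` and `createRefund` operations, and the `./orders-openapi.yaml` path are invented for illustration, and `step_ids` is a hypothetical helper.

```python
import json

# Illustrative Arazzo document; the API and operation names are hypothetical.
refund_workflow = {
    "arazzo": "1.0.1",
    "info": {"title": "Refund an order (illustrative)", "version": "0.1.0"},
    "sourceDescriptions": [
        {"name": "ordersApi", "url": "./orders-openapi.yaml", "type": "openapi"}
    ],
    "workflows": [
        {
            "workflowId": "refundOrder",
            "summary": "Look up an order, then refund its total.",
            "inputs": {
                "type": "object",
                "properties": {"orderId": {"type": "string"}},
            },
            "steps": [
                {
                    "stepId": "getOrder",
                    "operationId": "getOrder",
                    "parameters": [
                        {"name": "orderId", "in": "path", "value": "$inputs.orderId"}
                    ],
                    "successCriteria": [{"condition": "$statusCode == 200"}],
                    "outputs": {"total": "$response.body#/total"},
                },
                {
                    "stepId": "createRefund",
                    "operationId": "createRefund",
                    "requestBody": {
                        "contentType": "application/json",
                        "payload": {
                            "orderId": "$inputs.orderId",
                            "amount": "$steps.getOrder.outputs.total",
                        },
                    },
                    "successCriteria": [{"condition": "$statusCode == 201"}],
                },
            ],
        }
    ],
}


def step_ids(doc: dict, workflow_id: str) -> list:
    """Return the ordered step IDs of one workflow in an Arazzo-style document."""
    for workflow in doc["workflows"]:
        if workflow["workflowId"] == workflow_id:
            return [step["stepId"] for step in workflow["steps"]]
    raise KeyError(workflow_id)


if __name__ == "__main__":
    print(json.dumps(refund_workflow, indent=2))
```

The second step consumes an output of the first through `$steps.getOrder.outputs.total`, which is how a workflow document expresses the dependencies between API calls.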

This approach turns exploration into structured knowledge. The sandbox is not just a testing space; it is a workflow discovery engine. Over time, it evolves into a repository of organizational business logic: a source of truth that captures how the enterprise operates in machine-readable form.

When future agents need to perform similar tasks, they no longer have to reason from scratch. They can discover and reference these validated workflows, reducing cost, latency, and error. Experimentation gives way to efficiency.

Observing Reality: Learning from Production

Enterprises already have valuable knowledge embedded in their existing systems. Once connected to production telemetry and API logs, the agentic sandbox can observe real usage patterns and translate them into workflows automatically.

This capability transforms production data into intelligence. It reveals how the API portfolio is actually consumed: the sequences of API calls that deliver business outcomes every day. These are codified into Arazzo workflows and added to the organization’s repository, creating a living model of enterprise behavior.

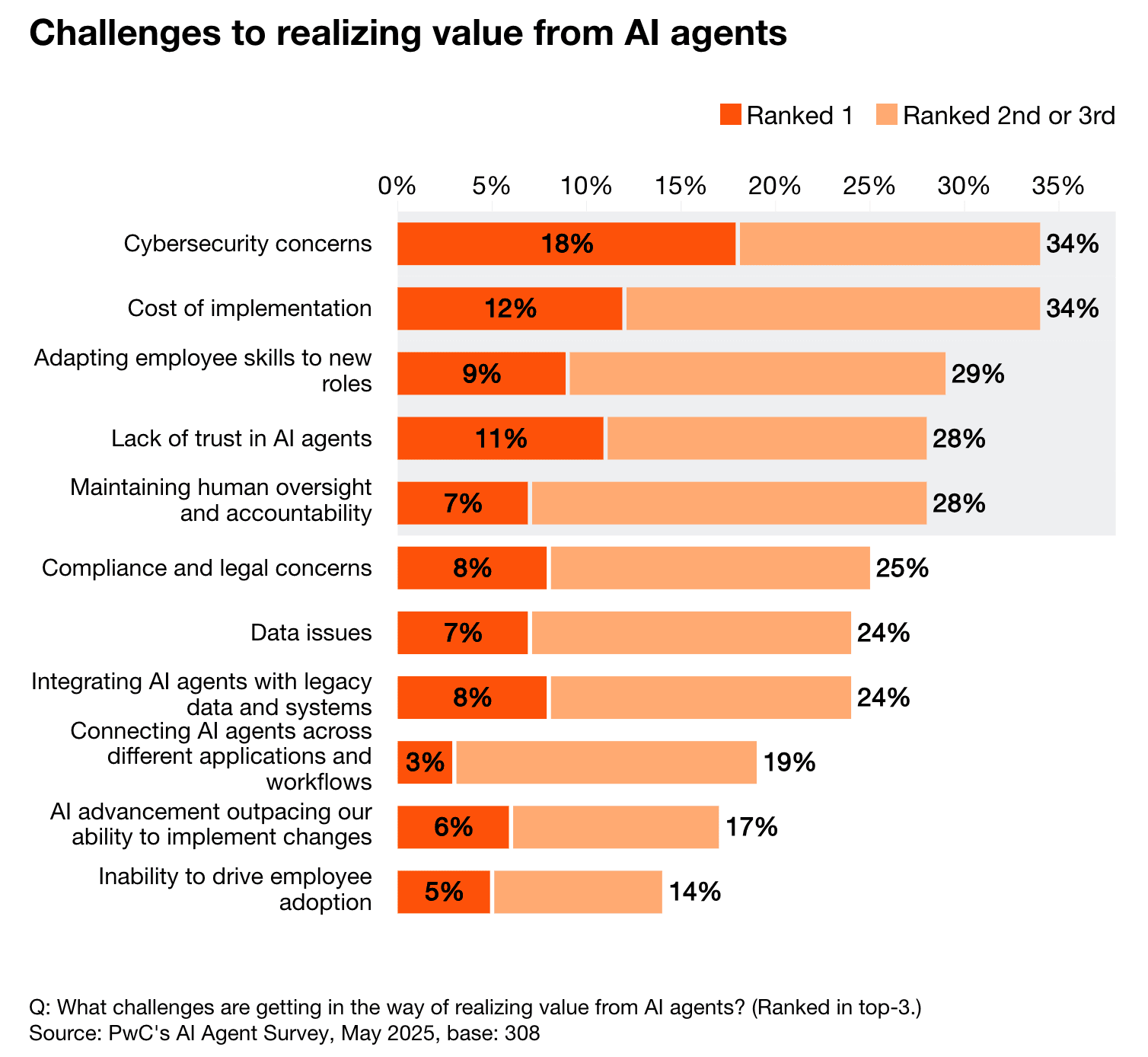

Observation also strengthens security. When agents or applications deviate from expected patterns, the sandbox flags those anomalies immediately. Rogue consumption or policy violations can be isolated and remediated before they spread. The same mechanism that powers discovery also enforces compliance, tackling one of the major concerns for organizations today.
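As a rough sketch of how observed traffic might feed both discovery and anomaly flagging, the example below groups log entries into per-session call sequences, treats sequences seen repeatedly as workflow candidates, and flags sessions whose sequence matches no known pattern. Everything here is an assumption made for illustration: the `(session_id, operation_id)` log shape, the chronological ordering of the log, the function names, and the exact-match comparison, which a real system would likely replace with something more tolerant.

```python
from collections import Counter, defaultdict


def sequences_by_session(log):
    """Group (session_id, operation_id) entries into ordered call sequences.

    Assumes the log is already in chronological order.
    """
    sessions = defaultdict(list)
    for session_id, operation_id in log:
        sessions[session_id].append(operation_id)
    return {sid: tuple(ops) for sid, ops in sessions.items()}


def candidate_workflows(log, min_count=2):
    """Sequences observed in at least `min_count` sessions."""
    counts = Counter(sequences_by_session(log).values())
    return {seq for seq, n in counts.items() if n >= min_count}


def flag_anomalies(log, known_sequences):
    """Session IDs whose call sequence matches no known pattern."""
    return sorted(
        sid
        for sid, seq in sequences_by_session(log).items()
        if seq not in known_sequences
    )
```

Fed a log in which two sessions call `getOrder` then `createRefund` and a third calls something else entirely, `candidate_workflows` would return the refund sequence and `flag_anomalies` would return only the third session.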

The result is a continuously improving feedback flywheel between reality and simulation: the agentic sandbox learns from production, and production becomes safer because of what the sandbox learns.

Efficiency, Interoperability, and Sovereignty

Enterprises cannot afford to treat AI as an endless reasoning engine. Every redundant decision burns compute, tokens, and energy. Once the intent is known and matched to a workflow, it should run deterministically. The workflow becomes the core enterprise IP, the differentiating value on offer.

Jentic’s sandboxes capture and codify those known paths so they can be discovered and executed efficiently. Agents can focus on reasoning only where reasoning adds value, not where the answer is already understood. This creates a measurable reduction in resource use while improving performance consistency.
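The idea of reasoning only where no known path exists can be sketched as a lookup with a fallback. This is a simplified illustration under stated assumptions: `normalize` and `dispatch` are hypothetical names, the registry is a plain dictionary keyed by normalized intent text, and matching is exact, whereas a production system would presumably match intents more flexibly.

```python
from typing import Callable, Dict, List, Tuple


def normalize(intent: str) -> str:
    """Lower-case and collapse whitespace so trivially different phrasings match."""
    return " ".join(intent.lower().split())


def dispatch(
    intent: str,
    registry: Dict[str, List[str]],
    reason: Callable[[str], List[str]],
) -> Tuple[str, List[str]]:
    """Use a validated workflow when one matches; otherwise fall back to reasoning.

    `reason` stands in for the expensive path (for example, an LLM planning call).
    """
    steps = registry.get(normalize(intent))
    if steps is not None:
        return ("workflow", steps)  # known path: no reasoning cost incurred
    return ("reasoned", reason(intent))
```

The design point is that `reason` is never invoked for a matched intent, so the cost of planning is paid only for tasks the enterprise has not already codified.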

Equally important is how this knowledge is represented. When workflows are your core IP, it becomes vital that they aren’t locked into someone else’s proprietary standard. This is why Arazzo is important as an open standard! That is what drove me, as a contributor to the OpenAPI Initiative, to help develop and launch Arazzo as an independent specification. It gives organizations sovereignty over their data and business logic. Workflows can be exported, inspected, and integrated anywhere: across internal platforms, partner ecosystems, or regulatory environments. The enterprise retains control over what the AI knows and how that knowledge is shared (with or without Jentic!).

Interoperability ensures longevity. Sovereignty ensures trust. Together, they make the agentic transformation sustainable.

The Continuous Feedback Flywheel of Safe Innovation

Once established, agentic sandboxes operate as a continuous engine of improvement.

- Agents explore and attempt to solve tasks using simulated systems.

- Their actions are observed, analyzed, and validated.

- Successful behaviors become reusable Arazzo workflows.

- Those workflows enrich the enterprise knowledge base.

- Future agents leverage that knowledge, reasoning less and executing more.

- Production telemetry feeds back new insights, and the cycle repeats.

For enterprises on the agentic business transformation journey, this process provides a controlled and measurable on-ramp to agentic automation. For AI-native companies, it offers an always-on environment for continuous discovery and workflow refinement. In both cases, innovation and governance move together.

When workflows are ready, they transition seamlessly from sandbox to production through Jentic’s managed execution layer, complete with authentication and authorization (or bring your own), observability, and auditability. The result is a closed loop of experimentation, validation, and deployment: a system that gets safer and smarter with every iteration, continually reinforcing enterprise standards.

Building the Infrastructure for Predictable AI

To paraphrase McKinsey: agentic transformation is no longer optional. The organizations that succeed will be those that can harness AI’s autonomy without surrendering control.

We believe the agentic sandbox is where that balance is achieved. It lets enterprises explore the frontier of AI safely, discover and codify their operational logic, and preserve sovereignty over the workflows that define their business. It turns experimentation into evidence, and evidence into execution.

The future of enterprise AI will not be defined by larger models, but by better systems: systems that can learn responsibly, reuse intelligently, and operate predictably. That is how enterprises move from curiosity to control, from reasoning to reliability, and from experiments to outcomes they can trust.